Based on the ideas in this post, I've made an improved, dynamic version that helps to find the records that cause a "String or binary data would be truncated" error when inserting into a table:

http://sqlblogcasts.com/blogs/danny/archive/2008/01/12/scuffling-with-string-or-binary-data-would-be-truncated.aspx

create procedure cst_checktruncatederror
    @src nvarchar(256) = N'sourcetable',  -- source table, optionally schema-qualified
    @tgt nvarchar(256) = N'targettable'   -- target table that raises the truncation error
as
begin
    set nocount on

    declare @cols nvarchar(max)
    declare @sql nvarchar(max)

    -- create temporary empty version of destination table (target proxy)
    -- note: use a global temp table, because a local one goes out of scope when the dynamic sql returns
    if object_id('tempdb..##target') is not null drop table ##target

    set @sql = concat('SELECT * INTO ##target FROM ', @tgt, ' WHERE 1 = 0')
    print @sql
    exec sp_executesql @sql

    -- get a comma-separated string with bracket-quoted column names that are comparable
    -- 1. do not use * to insert, because the column order might be off
    -- 2. wrap column names in brackets [] because reserved words might have been used, e.g. STATE or UNIQUE
    -- 3. exclude data types that EXCEPT cannot compare (geography, geometry, xml, text, ntext, image)
    --    and columns that cannot receive explicit values (rowversion/timestamp, identity)
    select @cols = stuff(
        (
            select concat(',', quotename(c.column_name))
            from information_schema.columns c
            where c.table_schema = isnull(parsename(@tgt, 2), schema_name())
              and c.table_name = parsename(@tgt, 1)
              and c.data_type not in ('geography', 'geometry', 'xml', 'text', 'ntext', 'image', 'timestamp')
              and columnproperty(object_id(@tgt), c.column_name, 'IsIdentity') = 0
            order by c.ordinal_position
            for xml path(''), type
        ).value('.', 'nvarchar(max)'), 1, 1, '')
    print @cols

    -- load source to target proxy
    -- with ANSI_WARNINGS OFF, values that are too long are silently truncated
    -- instead of raising the "string or binary data would be truncated" error
    -- TO DO: destination columns might be NOT NULL while the source contains NULLs; that results in a different error
    set @sql = concat('INSERT INTO ##target (', @cols, ') SELECT ', @cols, ' FROM ', @src)
    print @sql
    set ansi_warnings off
    exec sp_executesql @sql
    set ansi_warnings on

    -- return violating records: source rows that no longer match after being loaded into the target proxy
    set @sql = concat('SELECT ', @cols, ' FROM ', @src, ' EXCEPT SELECT ', @cols, ' FROM ##target')
    print @sql
    exec sp_executesql @sql

    -- clean up
    if object_id('tempdb..##target') is not null drop table ##target
end
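The core trick can be tried in isolation. This is a minimal sketch with two hypothetical temp tables, #src and #tgt, where #tgt.name is too short for one of the source rows: with ANSI_WARNINGS OFF the insert silently truncates, and EXCEPT then returns exactly the row that would have raised the error.

```sql
-- Hypothetical demo tables: #tgt.name is shorter than #src.name
CREATE TABLE #src (id int, name varchar(20));
CREATE TABLE #tgt (id int, name varchar(5));
INSERT INTO #src (id, name) VALUES (1, 'short'), (2, 'much too long');

SET ANSI_WARNINGS OFF;  -- truncate instead of raising the error
INSERT INTO #tgt (id, name) SELECT id, name FROM #src;
SET ANSI_WARNINGS ON;

-- Rows that changed on the way in are the violating rows: returns (2, 'much too long')
SELECT id, name FROM #src
EXCEPT
SELECT id, name FROM #tgt;
```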


LISTAGG / implode solution for SQL Server

Group multiple records into one and concatenate the string/text values of a field:

ALTER view [dbo].[vcomments] as
with x as
(
select
cmt_domain,
cmt_indx,
cmt_seq,
concat(cmt_cmmt1,cmt_cmmt2,cmt_cmmt3,cmt_cmmt4,cmt_cmmt5,cmt_cmmt6,cmt_cmmt7,cmt_cmmt8,cmt_cmmt9,cmt_cmmt10,cmt_cmmt11,cmt_cmmt12,cmt_cmmt13,cmt_cmmt14,cmt_cmmt15) as cmt_cmmt,
cmt_lang
from
v_cmt_det_act
)
select
cmt_domain,
cmt_indx,
-- FOR XML PATH returns a single value in which the values of all records are concatenated ("unioned").
-- However, special characters are encoded as escape sequences, i.e. "<" becomes "&lt;".
-- To reverse the encoding, the TYPE directive returns an XML value, and the VALUE method reads the entire document as text.
-- The [1] is required because VALUE expects a singleton, i.e. exactly ONE node
rtrim(stuff(
(
select char(10) + '[PAGE ' + cast(x2.cmt_seq as nvarchar(10)) + ']' + char(10) + x2.cmt_cmmt -- an expression without a column name gets no XML element to enclose it, so no XML tags are added
from x x2
where x1.cmt_domain = x2.cmt_domain and x1.cmt_indx = x2.cmt_indx
order by x2.cmt_seq
for XML PATH (''), type -- the PATH argument is the name of the element that wraps each row; an empty string removes that element
).value('(/)[1]','nvarchar(max)')
,1,1,'')) as cmt_cmmt -- stuff removes the leading char(10) without the length limit of substring(...,2,100000)
from
(
select distinct cmt_domain, cmt_indx
from x
) x1
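A minimal, self-contained sketch of the same FOR XML PATH pattern, using a hypothetical table variable @comments instead of v_cmt_det_act. The values contain "<" and "&" to show that TYPE plus .value() returns them unescaped. On SQL Server 2017 and later, the built-in STRING_AGG function does the same job; it is shown last for comparison and will not run on older versions.

```sql
-- Hypothetical sample data: two groups, group 1 has two ordered parts
DECLARE @comments TABLE (grp int, seq int, txt nvarchar(100));
INSERT INTO @comments (grp, seq, txt) VALUES (1, 1, N'a < b'), (1, 2, N'c & d'), (2, 1, N'single');

-- FOR XML PATH(''): no row element; TYPE + value('.') decodes &lt; and &amp; again
SELECT g.grp,
       STUFF((SELECT N', ' + c.txt
              FROM @comments c
              WHERE c.grp = g.grp
              ORDER BY c.seq
              FOR XML PATH(''), TYPE).value('.', 'nvarchar(max)'), 1, 2, N'') AS txt_list
FROM (SELECT DISTINCT grp FROM @comments) g;
-- grp 1: 'a < b, c & d'; grp 2: 'single'

-- SQL Server 2017+ only: same result without the XML detour
SELECT grp, STRING_AGG(txt, N', ') WITHIN GROUP (ORDER BY seq) AS txt_list
FROM @comments
GROUP BY grp;
```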


Labels:

Database,

SQL,

SQL Server


Query Active Directory from SQL Server

Get active directory server and LDAP servers in a domain

nltest /dclist:sub.dom.com

returns, among others:

\\dcserver1.sub.dom.com -> dcserver1 is the AD server

Analyse active directory structure

Use Sysinternals ADExplorer to analyse the structure of active directory. Connect to server: dcserver1

Users in a group from command prompt

net group adtestgroup /domain

Active Directory structure in FROM clause

E.g. FROM "LDAP://sub.dom.com/OU=Groups,OU=Global,DC=sub,DC=dom,DC=com"

LDAP = protocol name; it is case-sensitive, so always write it exactly like this (in capitals)

Before the slash: sub.dom.com is the domain for which to find the AD structure

After the slash: the part of the tree to search, specified from right to left (from child to parent). So in the example, from parent to child:

com -> dom -> sub -> Global -> Groups. The search covers the nodes beneath the lowest level, which is Groups (e.g. the group ADTestGroup)

The names ("dom", "Groups", etc.), types ("OU", "CN", "DC") and depth of the levels differ per AD installation, so check with ADExplorer how AD is configured in the specific instance.

Users in a group from SQL Server

First, add a linked server to ADSI (fill with correct password for DOMAIN\ACCOUNT):

exec sp_addlinkedserver @server = 'ADSI', @srvproduct = 'Active Directory Services 2.5', @provider = 'ADSDSOObject', @datasrc = 'adsdatasource'

exec sp_addlinkedsrvlogin @rmtsrvname = 'ADSI', @useself = 'False', @locallogin = null, @rmtuser = 'DOMAIN\ACCOUNT', @rmtpassword = '********'

Select users in group "adtestgroup":

select * from openquery(ADSI,'

SELECT objectCategory, cn, sn, mail, name, department, company

FROM ''LDAP://sub.dom.com/DC=sub,DC=dom,DC=com''

WHERE MemberOf=''CN=adtestgroup,OU=Groups,OU=Global,DC=sub,DC=dom,DC=com''

ORDER BY cn

')

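OPENQUERY does not accept a variable as its query text, so the group name has to be inserted with dynamic SQL. Unfortunately, dynamic SQL is not possible inside a UDF, so a procedure is needed: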

alter procedure cst_usersingroup(@grp nvarchar(100))

as

begin

declare @sql as nvarchar(max) = '

select samaccountname as accountname, name, mail, department, title, company

from openquery(ADSI,''

SELECT objectCategory, cn, sn, mail, name, department, company, title, samaccountname

FROM ''''LDAP://sub.dom.com/DC=sub,DC=dom,DC=com''''

WHERE MemberOf=''''CN=' + @grp + ',OU=Groups,OU=Global,DC=sub,DC=dom,DC=com'''''')

x

order by samaccountname'

exec(@sql)

end

Select all BI_* and EDW_* groups from active directory:

select lower(cn) as grp, whencreated, whenchanged, distinguishedname

from openquery(ADSI,'

SELECT cn, distinguishedname, whencreated, whenchanged

FROM ''LDAP://sub.dom.com/OU=Groups,OU=Global,DC=sub,DC=dom,DC=com''

where CN=''bi_*'' or CN=''edw_*''

') x

order by cn

Notes:

- In most cases, all domain users can query AD using the basic search method specified before. More advanced AD search methods might be disabled and require special rights

Labels:

Database,

SQL,

SQL Server


Create schema with separate authorization

Perform the following steps in SQL Server to create a schema called "bi" and give create view rights to user "domain\testuser":

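CREATE SCHEMA [bi]
GO

CREATE ROLE [db_bischema]

ALTER AUTHORIZATION ON SCHEMA::bi TO [db_bischema] -- the role owns the schema, so its members can alter and drop objects in it

GRANT CREATE VIEW TO [db_bischema] -- database-level permission; combined with ownership of schema bi it allows creating (and dropping) views there

ALTER ROLE [db_bischema] ADD MEMBER [domain\testuser] -- the user must already exist in the database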

Advantages:


- If views are removed from or added to the schema, authorization does not need to be added to each individual view

- Give certain users the rights to (re)create the views inside the schema, without the risk of modification of the rest of the database
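To check the setup, a sketch like the following can be run by a database owner. It assumes the schema bi and role db_bischema from the steps above exist, and uses a hypothetical user bi_testuser created WITHOUT LOGIN, so no domain account is needed. The user is impersonated, creates and drops a view in bi, and the ALTER permission on bi and dbo is checked with HAS_PERMS_BY_NAME.

```sql
-- Hypothetical test user without a login
CREATE USER bi_testuser WITHOUT LOGIN;
ALTER ROLE [db_bischema] ADD MEMBER bi_testuser;

EXECUTE AS USER = N'bi_testuser';
    -- CREATE VIEW must be alone in its batch, hence EXEC(); allowed via the role
    EXEC (N'CREATE VIEW bi.v_permission_test AS SELECT 1 AS one');
    EXEC (N'DROP VIEW bi.v_permission_test');
    -- 1 via ownership of schema bi; 0 for dbo unless granted elsewhere
    SELECT HAS_PERMS_BY_NAME(N'bi', N'SCHEMA', N'ALTER')  AS can_alter_bi,
           HAS_PERMS_BY_NAME(N'dbo', N'SCHEMA', N'ALTER') AS can_alter_dbo;
REVERT;

-- Clean up the test user
ALTER ROLE [db_bischema] DROP MEMBER bi_testuser;
DROP USER bi_testuser;
```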

Labels:

Database,

SQL,

SQL Server


Packing intervals

Solution to the "packing intervals" problem by Itzik Ben-Gan:

http://www.solidq.com/sqj/Pages/2011-March-Issue/Packing-Intervals.aspx

Scroll to code listing 4 for the SQL code.

Registration is required to copy and paste the code. It can only be copied and pasted from the PDF that can be downloaded after registration.
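Listing 4 itself is not reproduced here. As a rough illustration of the problem, this is a minimal sketch of a different, window-function-based approach (it needs SQL Server 2012 or later, so it is not the 2011 listing): an interval starts a new packed group when it begins after the latest end date of all earlier intervals. The table variable @intervals and its columns are hypothetical.

```sql
DECLARE @intervals TABLE (id int PRIMARY KEY, startdate date NOT NULL, enddate date NOT NULL);
INSERT INTO @intervals (id, startdate, enddate) VALUES
    (1, '20130101', '20130105'),
    (2, '20130103', '20130108'),   -- overlaps interval 1
    (3, '20130110', '20130112');   -- separate

WITH c1 AS (
    SELECT id, startdate, enddate,
           -- 1 when every earlier interval has ended before this one starts (touching intervals are merged)
           CASE WHEN startdate <= MAX(enddate) OVER (ORDER BY startdate, enddate, id
                                                      ROWS BETWEEN UNBOUNDED PRECEDING AND 1 PRECEDING)
                THEN 0 ELSE 1 END AS isstart
    FROM @intervals
), c2 AS (
    -- running total of group starts, in the same order, gives each interval its group number
    SELECT startdate, enddate,
           SUM(isstart) OVER (ORDER BY startdate, enddate, id ROWS UNBOUNDED PRECEDING) AS grp
    FROM c1
)
SELECT MIN(startdate) AS startdate, MAX(enddate) AS enddate
FROM c2
GROUP BY grp
ORDER BY startdate;
-- returns 2013-01-01 .. 2013-01-08 and 2013-01-10 .. 2013-01-12
```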


Labels:

SQL,

SQL Server


Count of weekend days in date range

Use the formula in "calc_name" or "calc_df" to calculate the number of weekend days in a date range, including the start and end date. Inclusive means that if e.g. the start date is a Saturday, it is counted. Both formulas assume that the start date is not after the end date. The code is pure SQL Server T-SQL, with no need for helper tables or cursors.


SELECT
x.*,
(DATEDIFF(wk, sd, ed) * 2)
+(CASE WHEN DATENAME(dw, sd) = 'Sunday' THEN 1 ELSE 0 END)
+(CASE WHEN DATENAME(dw, ed) = 'Saturday' THEN 1 ELSE 0 END) as calc_name,
DATEDIFF(wk, sd, ed) * 2
+CASE DATEPART(dw, sd)+@@datefirst WHEN 8 THEN 1 ELSE 0 END
+CASE DATEPART(dw, ed)+@@datefirst WHEN 7 THEN 1 WHEN 14 THEN 1 ELSE 0 END as calc_df,
@@DATEFIRST as datefirst,
(DATEDIFF(wk, sd, ed) * 2) as weeks, -- 2 weekend days per week boundary (Sunday) crossed; DATEDIFF(wk) ignores DATEFIRST
CASE WHEN DATENAME(dw, sd) = 'Sunday' THEN 1 ELSE 0 END as sun_start,
CASE DATEPART(dw, sd)+@@datefirst WHEN 8 THEN 1 ELSE 0 END as sun_start_df,
CASE WHEN DATENAME(dw, ed) = 'Saturday' THEN 1 ELSE 0 END as sat_end,
CASE DATEPART(dw, ed)+@@datefirst WHEN 7 THEN 1 WHEN 14 THEN 1 ELSE 0 END as sat_end_df,
DATEPART(dw, sd)+@@datefirst as sun_df, -- 8 when sd is a Sunday, whatever DATEFIRST is
DATEPART(dw, ed)+@@datefirst as sat_df  -- 7 or 14 when ed is a Saturday
from
(
-- unambiguous yyyymmdd literals, independent of the DATEFORMAT setting
select 1 as correct, cast('20130317' as date) as sd, cast('20130322' as date) as ed union all
select 2, '20130316', '20130322' union all
select 2, '20130316', '20130317' union all
select 3, '20130316', '20130323' union all
select 3, '20130310', '20130322'
) x

Note:

- SET DATEFIRST is not needed, but it can be used to check that "calc_df" gives the correct outcome for any first day of the week

- The "calc_df" expression is more robust, since it does not rely on day names, which depend on the session language (SET LANGUAGE). But it is a bit more verbose and obscure than "calc_name"

- Column "correct" contains the correct number of weekend days, as a reference for the calculation

- Subquery x contains some sample start and end dates
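To double-check the formula for an arbitrary range, a brute-force count can be compared with "calc_df". This is a sketch using a recursive CTE that generates every day in the range; @sd, @ed and @weekend_days are hypothetical variables, and the sample range is the last test row above. It is only meant as a check, since it is much slower than the formula for long ranges.

```sql
DECLARE @sd date = '20130310', @ed date = '20130322';
DECLARE @weekend_days int;

WITH days AS (
    SELECT @sd AS d
    UNION ALL
    SELECT DATEADD(day, 1, d) FROM days WHERE d < @ed
)
SELECT @weekend_days = COUNT(*)
FROM days
-- DATEPART(dw, d) + @@DATEFIRST is 8 for Sunday and 7 or 14 for Saturday, so modulo 7 gives 1 or 0
WHERE (DATEPART(dw, d) + @@DATEFIRST) % 7 IN (0, 1)
OPTION (MAXRECURSION 0);

SELECT @weekend_days AS brute_force,
       DATEDIFF(wk, @sd, @ed) * 2
       + CASE DATEPART(dw, @sd) + @@DATEFIRST WHEN 8 THEN 1 ELSE 0 END
       + CASE DATEPART(dw, @ed) + @@DATEFIRST WHEN 7 THEN 1 WHEN 14 THEN 1 ELSE 0 END AS calc_df;
-- both columns return 3 (Sunday 10 March, Saturday 16 March, Sunday 17 March)
```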


Labels:

SQL,

SQL Server

No comments:

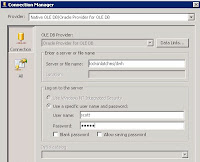

Oracle OLE DB source in SSIS

An OLE DB source to Oracle can be added to a SSIS package.

Example data:

Server: locksnlatches.mydomain.com

Oracle instance/SID: dwh

Username/schema: scott

Password: tiger

An OLE DB source to Oracle can be added as follows:

- From the menubar, select SSIS -> New connection... -> OLEDB -> Add -> New

Provider: Oracle Provider for OLE DB

Server or filename: locksnlatches/dwh

User name: scott

Password: tiger

Notes:

- The "Oracle Provider for OLE DB" provider always shows up in SSIS. It is a wrapper around the Oracle Client driver and is installed together with Integration Services. However, the Oracle Client itself has to be installed separately. It can be downloaded from the Oracle website: instantclient-basiclite-nt-11.2.0.2.0. Install the 32-bit version of the driver to be able to use it in the design environment: the Visual Studio / SSIS IDE is a 32-bit application and cannot load 64-bit components.

- It is possible to use the 64-bit Oracle Client driver during execution of the package, e.g. via dtexec, but it has to be installed in addition to the 32-bit one. The SSIS package then has to be configured to use this other version at runtime.

- If the server cannot be found, use the fully qualified name, e.g. locksnlatches.mydomain.com/dwh. One reason might be that the Oracle server is located in a different (Windows) domain than the SSIS server.

Labels:

Oracle,

SQL,

SQL Server,

SSIS


Query Oracle from SQL Server

- Install the Oracle Instant Client (OIC) on the machine where SQL Server is running and add the "Oracle Provider for OLE DB" driver, e.g. to folder c:\oracle11g.

- For a SQL Server x64 version running on a Windows x64 OS download the instantclient-basiclite-windows.x64-11.2.0.2.0.zip. This is a 22 MB download.

- Then add the Oracle Data Access Components (ODAC) to get the "Oracle Provider for OLE DB" driver (OraOLEDB.Oracle):

http://www.oracle.com/technetwork/database/windows/downloads/index-090165.html

At the time of writing (March 2012): select the "64-bit ODAC 11.2 Release 4 (11.2.0.3.0) Xcopy for Windows x64". This is a 54 MB download.

- Inside SQL Server Management Studio:

Linked Servers -> Providers -> OraOLEDB.Oracle -> Allow inprocess = true

- Optional: to enable ad hoc querying (querying without a linked server), enable this:

- SQL Server 2005 via surface area configuration tool

- SQL Server 2008 via facets: inside SQL Server Management Studio, right click the server -> facets -> facet: surface area configuration -> AdHocRemoteQueries = true

- Optional: if the connection to Oracle should be based on a TNS names entry, then add an "admin" folder to the OIC folder. Put or create a tnsnames.ora file there, e.g. c:\oracle11g\admin\tnsnames.ora

- Optional: use "tnsping" to test the entry from the command prompt, e.g. "tnsping dwh.world". If it doesn't recognize the entry, then create an environment variable called "TNS_ADMIN" and let it point to this "admin" folder, e.g. c:\oracle11g\admin

- Optional: if the following steps to get data from an Oracle instance don't work, then edit this registry key: HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\MSDTC\MTxOCI.

Set the following values:

OracleXaLib=oraclient11.dll

OracleSqlLib=orasql11.dll

OracleOciLib=oci.dll

Note: these DLLs can be found in the c:\oracle11g\product\11.2.0\client_1\BIN folder. The specific version depends on the Oracle Client and might be for example oraclient10.dll and orasql10.dll. Use the names of the DLLs as found in this folder.
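The two SSMS settings above ("Allow inprocess" and ad hoc querying) can also be scripted. This is a sketch: sp_configure and RECONFIGURE are documented, while master.dbo.sp_MSset_oledb_prop is an undocumented system procedure, so treat that line as an assumption to verify on your SQL Server version.

```sql
-- "Allow inprocess" for the Oracle provider (undocumented procedure)
EXEC master.dbo.sp_MSset_oledb_prop N'OraOLEDB.Oracle', N'AllowInProcess', 1;

-- Ad hoc (OPENROWSET) queries; same effect as the surface area / facet setting
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
EXEC sp_configure 'Ad Hoc Distributed Queries', 1;
RECONFIGURE;
```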

Three ways to get the data from an Oracle instance

Example:


Server: locksnlatches

Oracle instance (SID): dwh

Port: 1521

Schema/user: scott

Password: tiger

tnsnames.ora entry:

dwh.world =
  (DESCRIPTION =
    (CID = DWH_APP)
    (ADDRESS_LIST =
      (ADDRESS = (PROTOCOL = TCP)(HOST = locksnlatches)(PORT = 1521))
    )
    (CONNECT_DATA =
      (SID = dwh)
    )
  )


1) Using a linked server

- Linked Servers -> New linked server... -> General ->

- linked server: lnk_locksnlatches (this name can be anything)

- provider: Oracle provider for OLE DB

- product name: oracle (this name can be anything)

- data source: (DESCRIPTION=(CID=DWH_APP)(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)(HOST=locksnlatches)(PORT=1521)))(CONNECT_DATA=(SID=dwh)))

- provider string: leave empty!

- location: leave empty!

- catalog: leave empty!

- Linked Servers -> New linked server... -> Security ->

- Be made using this security context

- Remote login: scott

- With password: tiger

Use the four-part name (linked server, catalog, schema, object; the catalog is left empty for Oracle) to retrieve data from Oracle:

SELECT 1 FROM lnk_locksnlatches..scott.dual

Note: the "security" tab specifies the Oracle schema/user and password used by the connection!
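The linked server from method 1 can also be created with T-SQL instead of the dialog. A sketch with the example values above: sp_addlinkedserver corresponds to the "General" page and sp_addlinkedsrvlogin to the "Security" page. OPENQUERY sends the query text to Oracle unchanged, which can sidestep naming issues (e.g. Oracle's uppercase object names) that four-part names sometimes run into.

```sql
-- General page: provider and TNS-less data source
EXEC master.dbo.sp_addlinkedserver
     @server     = N'lnk_locksnlatches',
     @srvproduct = N'oracle',
     @provider   = N'OraOLEDB.Oracle',
     @datasrc    = N'(DESCRIPTION=(CID=DWH_APP)(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)(HOST=locksnlatches)(PORT=1521)))(CONNECT_DATA=(SID=dwh)))';

-- Security page: "Be made using this security context"
EXEC master.dbo.sp_addlinkedsrvlogin
     @rmtsrvname  = N'lnk_locksnlatches',
     @useself     = N'False',
     @locallogin  = NULL,
     @rmtuser     = N'scott',
     @rmtpassword = N'tiger';

-- Pass-through query, executed by Oracle as-is
SELECT * FROM OPENQUERY(lnk_locksnlatches, 'SELECT 1 AS one FROM DUAL');
```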

2) Without a linked server (TNS-less)

SELECT * FROM OPENROWSET('OraOLEDB.Oracle',

'(DESCRIPTION=(CID=DWH_APP)(ADDRESS_LIST= (ADDRESS=(PROTOCOL=TCP)(HOST=locksnlatches)(PORT=1521)))(CONNECT_DATA=(SID=dwh)))';'scott';'tiger','SELECT 1 FROM DUAL') x

3) Without a linked server (TNS-full)

SELECT * FROM OPENROWSET('OraOLEDB.Oracle',

'dwh.world';'scott';'tiger','SELECT 1 FROM DUAL') x

Labels:

Database,

Oracle,

SQL,

SQL Server


Power view on analysis services tabular

How to create a Power View report using Sharepoint 2010 and Analysis Services 2012 Tabular as the data source?

0) Prerequisites

A server called "bisql01" with the following services installed:

- sql server 2012 (mssqlserver = default instance)

- analysis services 2012 (mssqlserver = default instance)

- analysis services 2012 tabular mode / vertipaq (astabular = named instance)

- reporting services (mssqlserver = default instance)

- sharepoint 2010 installed at https://powerview.locksnlatches.com

INSIDE VISUAL STUDIO 2012

1) create project

new project -> analysis services tabular project

name: cp_windows7

2) set deploy target of model

rightclick project "cp_windows7" -> properties ->

server: localhost\astabular

database: cpdm

impersonation settings: default

note: there is probably also a default instance of analysis services running; this is the regular multidimensional cube instance like the previous versions of sql server, not the named tabular model instance

note: the cpdm tabular database will be created on deploy

note: there will also be a work tabular database for the current project, named after the project, the first data source and a guid, e.g. CPDV_BI01_SQL_01e0810e-c8ab-46fd-afe6-098348336a9a. Do not delete this database from the analysis services server!

3) add tables to model

model -> import from datasource -> microsoft sql server:

server name: bisql01 (or "localhost")

log on to the server: use windows authentication

database: cpdv

use service account,

select tables/views,

finish

edit the bism model: model -> model view -> diagram view. If there are no relationships in the source, then they can be added here by dragging and dropping fields from a child to a parent table or vice versa.

change connection type: model -> existing connections -> edit

use sql server authentication

name: sa

password: ***

note: use model -> existing connections, to add tables later on from the same connection

4) set date dimension

view the data: model -> model view -> data view

click the date column, table -> date -> mark as date table

note: mark as date table does not work in the diagram view, only in the data view

note: make sure the date column is unique and contiguous, i.e. contains every date in its range without gaps

note: a fact table can join to the date dimension on this date field, but also on a unique number field. A date field also needs to be selected in the latter case for "mark as date table" to work.

5) set authorization

models -> roles -> new

name: reader

permissions: read

models -> roles -> members -> add

cloud\bi01_sql

cloud\bi01_admin (=sharepoint account from which powerview is started)

note: if members are added to this role from management studio to the tabular model instance database then they will be overwritten at the next deploy of the project! So, specify the members in the project.

6) build -> deploy

INSIDE SHAREPOINT 2010

7) create semantic model connection

documents -> new document -> bi semantic model connection ->

filename: model.bism (this is the name of the document in sharepoint; it can be anything)

servername: bisql01\astabular

database: cpdm

note: to fix the error "database cannot be found", double-check that the reader role has read permissions and that the windows accounts used by Sharepoint are added to that role

8) create report data source to semantic model connection

documents -> new document -> report data source ->

data source type: microsoft bi semantic model for power view

connection string: datasource='https://powerview.locksnlatches.com/Shared%20Documents/model.bism'

credentials: stored credentials

username: cloud\bi01_sql

password: ***

availability: enable

9) create powerview report

click dropdown arrow of report data source -> create power view report

note: to fix the error "User 'DOMAIN\user' does not have required permissions": start IE in "run as administrator" mode and connect using "localhost" instead of the server name.

note: the reporting services logfiles are located here C:\Program Files\Common Files\Microsoft Shared\Web Server Extensions\14\WebServices\LogFiles

SQL Server statistics

Statistics are information about the distribution of data in a column, maintained internally by the database engine.

Rules and behaviour with regard to statistics, when automatic creation/updating is turned on:


- Statistics are automatically created when an index is created. The statistics object covers all key columns of a (composite) index, but its histogram is built only on the first column.

- Statistics can be created manually on any column or combination of columns.

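- Statistics are automatically created/updated when the query optimizer needs them: single-column statistics are created for columns used in predicates (AUTO_CREATE_STATISTICS), and existing statistics are updated when enough rows have changed since the last update (AUTO_UPDATE_STATISTICS).

- By default, an automatic update is synchronous: the query that triggers it waits until the statistics have been updated. When the "update statistics asynchronously" option (AUTO_UPDATE_STATISTICS_ASYNC) is turned on, the query is compiled with the existing statistics and the update runs in the background. Automatic creation of statistics is always synchronous.

- Asynchronously updated statistics take effect the next time a query is compiled, e.g. the next run of the query that caused the update.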
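A sketch of the manual side of these rules, on a hypothetical table dbo.stats_demo: create statistics by hand on a non-indexed column, list all statistics objects on the table with STATS_DATE, and show the header, density vector and histogram with DBCC SHOW_STATISTICS. The asynchronous option is a database setting; that line is commented out because it changes database-wide behaviour.

```sql
-- Hypothetical demo table; the primary key index gets statistics automatically
CREATE TABLE dbo.stats_demo (id int IDENTITY PRIMARY KEY, city nvarchar(50), amount int);
INSERT INTO dbo.stats_demo (city, amount) VALUES (N'Utrecht', 10), (N'Utrecht', 20), (N'Delft', 30);

-- Statistics created manually on a column that has no index
CREATE STATISTICS st_stats_demo_city ON dbo.stats_demo (city);

-- All statistics objects on the table and when they were last updated
SELECT s.name, s.auto_created, s.user_created,
       STATS_DATE(s.object_id, s.stats_id) AS last_updated
FROM sys.stats AS s
WHERE s.object_id = OBJECT_ID(N'dbo.stats_demo');

-- Header, density vector and histogram (histogram on the leading column only)
DBCC SHOW_STATISTICS (N'dbo.stats_demo', st_stats_demo_city);

-- ALTER DATABASE CURRENT SET AUTO_UPDATE_STATISTICS_ASYNC ON;
```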

http://www.sql-server-performance.com/tips/update_statistics_p1.aspx

http://blogs.technet.com/b/rob/archive/2008/05/16/sql-server-statistics.aspx

http://technet.microsoft.com/nl-nl/library/cc966419(en-us).aspx

Labels:

SQL,

SQL Server


Derived table vs subquery

There are several ways to combine datasets:


- Subquery: SELECT clause, correlation

- Join (cross/left/right/inner/full): FROM clause, no correlation

- Cross apply: FROM clause, correlation

The "cross apply" combines the best features of the subquery and join, but is performance-wise not always the best choice.
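A small illustration with hypothetical table variables @customers and @orders: the "largest order per customer" question answered with a subquery, with a join to a derived table, and with CROSS APPLY. Only CROSS APPLY can return several columns of the correlated row (here order_id and amount). Note that CROSS APPLY drops customers without orders (Carol); OUTER APPLY would keep them.

```sql
DECLARE @customers TABLE (customer_id int, name nvarchar(50));
DECLARE @orders TABLE (order_id int, customer_id int, amount money);
INSERT INTO @customers VALUES (1, N'Alice'), (2, N'Bob'), (3, N'Carol');
INSERT INTO @orders VALUES (10, 1, 50), (11, 1, 75), (12, 2, 20);

-- Subquery in the SELECT clause: correlated, but returns a single value
SELECT c.name,
       (SELECT MAX(o.amount) FROM @orders o WHERE o.customer_id = c.customer_id) AS max_amount
FROM @customers c;

-- Join to a derived table: no correlation, so it is aggregated for all customers first
SELECT c.name, d.max_amount
FROM @customers c
LEFT JOIN (SELECT customer_id, MAX(amount) AS max_amount
           FROM @orders GROUP BY customer_id) d
       ON d.customer_id = c.customer_id;

-- CROSS APPLY: correlated like a subquery, but may return several columns and rows
SELECT c.name, t.order_id, t.amount
FROM @customers c
CROSS APPLY (SELECT TOP (1) o.order_id, o.amount
             FROM @orders o
             WHERE o.customer_id = c.customer_id
             ORDER BY o.amount DESC) t;
-- one row for Alice (order 11) and one for Bob (order 12); Carol has no orders
```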

Labels:

SQL


Run SSMS using different Windows account

You can log on to a SQL Server database using SQL Server Management Studio (SSMS) with a Windows Authentication login / NT account / Windows login.


However, the Active Directory domain account to connect with cannot be entered: the field is greyed out and always shows the account of the user that is currently logged on.

There are several ways to bypass this and make a connection:

1) Run SSMS under different privileges

- Create a shortcut to SSMS with the following command:

runas /netonly /user:domain\usr "g:\Program Files (x86)\Microsoft SQL Server\90\Tools\Binn\VSShell\Common7\IDE\SqlWb.exe"

- Double-click the shortcut and enter the password for the runas account

Note: The greyed-out login screen in SSMS still displays the logged on user, not the runas account. This might be confusing.
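Because the dialog keeps showing the local account, it helps to ask SQL Server which login it actually sees once connected. A sketch: SUSER_SNAME() works for any login, while reading sys.dm_exec_connections may require the VIEW SERVER STATE permission.

```sql
-- Login as seen by SQL Server, plus how this connection was authenticated and transported
SELECT SUSER_SNAME() AS login_name,
       c.auth_scheme,     -- e.g. NTLM, KERBEROS or SQL
       c.net_transport    -- e.g. Named pipe, TCP, Shared memory
FROM sys.dm_exec_connections AS c
WHERE c.session_id = @@SPID;
```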

1a) Run SSMS under different privileges, without needing to enter a password

- Download "runasspc" (can be easily found with a search engine)

- Extract "runasspc.exe" from the zip file and copy to the windows folder

- Create a shortcut to SSMS with the following command:

runasspc "g:\Program Files (x86)\Microsoft SQL Server\90\Tools\Binn\VSShell\Common7\IDE\SqlWb.exe" /domain:domain /user:usr /password:pwd

2) Connect via Named Pipes after authenticating to the server with "net use"

- Open a command window (Win+R, cmd.exe)

- Delete all mappings: net use * /delete /y

- Create mapping to SQL Server server: net use \\servername /user:domain\usr

- Start SQL Server Configuration Manager via Programs (or locate SQLServerManager.msc directly)

- Open SQL Native Client Configuration

- Enable Named Pipes protocol and move to top in the order

- Add a new alias. Use:

a. "Named Pipes" as network library

b. Server name: fill in the remote server name. The pipe field will display: \\[servername]\pipe\sql\query

- Start SSMS:

a. Server name: fill in remote server name

b. Authentication: Windows Authentication

c. Connection Properties -> Network Protocol, set to Named Pipes

3) Set credentials for a remote server using Credential Manager

- Control Panel -> Credential Manager

- Add the domain\account and password for the remote server

4) Set terminal services Active Directory rights

Get the "allow logon to terminal services" right for the remote server for the domain account. Then make a terminal services connection to the server with that account and start SSMS within that session.

Links:

http://www.olegsych.com/2009/05/crossing-domain-boundaries-windows-authentication/

Labels:

Database,

OS,

SQL


Identifying keys

A key that is made up of one field is a simple key. All other keys are combined keys. There are two types of combined keys, compound and composite:

Simple key: key that consists of one field

Combined key: key that consists of more than one field

Compound key: combined key in which each field is itself a simple key (of another entity)

Composite key: combined key, that is not a compound key

A new term I have made up myself:

Concatenated key: a simple key, that is the concatenation of the fields in a combined key
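To make the definitions concrete, a sketch with hypothetical tables: student and course have simple keys, enrollment has a compound key (both fields are keys of other entities), and order_line has a composite key plus a concatenated key stored as a persisted computed column. The separator in the concatenated key matters: without it, (1, 12) and (11, 2) would both become '112'.

```sql
CREATE TABLE dbo.student (student_id int NOT NULL PRIMARY KEY);  -- simple key
CREATE TABLE dbo.course  (course_id  int NOT NULL PRIMARY KEY);  -- simple key

-- Compound key: every field is itself the simple key of another entity
CREATE TABLE dbo.enrollment (
    student_id int NOT NULL REFERENCES dbo.student (student_id),
    course_id  int NOT NULL REFERENCES dbo.course (course_id),
    CONSTRAINT pk_enrollment PRIMARY KEY (student_id, course_id)
);

-- Composite key: line_no is not the key of another entity
CREATE TABLE dbo.order_line (
    order_id int NOT NULL,
    line_no  int NOT NULL,
    -- Concatenated key: a simple key built from the fields of the combined key
    order_line_key AS CAST(order_id AS varchar(10)) + '-' + CAST(line_no AS varchar(10)) PERSISTED,
    CONSTRAINT pk_order_line PRIMARY KEY (order_id, line_no),
    CONSTRAINT uq_order_line_key UNIQUE (order_line_key)
);

-- Without the '-' separator these two rows would get the same concatenated key '112'
INSERT INTO dbo.order_line (order_id, line_no) VALUES (1, 12), (11, 2);
SELECT order_id, line_no, order_line_key FROM dbo.order_line;  -- keys '1-12' and '11-2'
```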


Labels:

Database,

Oracle,

SQL


Cannot load package & required privilege is not held by the client

How to start a filesystem dtsx package from sql agent, without a config file and without adding a package password to the command line:

- ssis: make note of the package owner; this should be a windows (domain) account

- ssis: package: encrypt with userkey

- os: sql agent service should run under LocalSystem account

- os: add package owner to local groups: SQLServer2005DTSUser$SERVER, SQLServer2005SQLAgent$SERVER

- ssms: create credential for package owner

- ssms: create proxy using created credential, add all principals, add all subsystems

- ssms: use the created proxy as "run as" user for the job step that starts the package

There is no easy option to specify the password for a package from sql agent, hence the "encrypt with userkey".

See also: http://support.microsoft.com/kb/918760

Background information

Principals have access to a proxy. A proxy is a mapping to a credential. A credential is a mapping to a Windows account.

1. SSMS / Security / Credentials: create a credential. Set its identity to an existing account that has the appropriate rights to run the job.

2. SQL Server Agent / Proxies / SSIS Package Execution: create a new proxy.

2a. Proxy / General / Credential name: select the credential from step 1.

2b. Proxy / General / Subsystems: select all.

2c. Proxy / Principals / MSDB role: add the principals SQLAgentUserRole, SQLAgentReaderRole and SQLAgentOperatorRole. These are the principals that can access the proxy.

2d. The account must have the "Log on as a batch job" right (check with secpol.msc).

4. Set the SQL Server Agent service account to LocalSystem.

5. If needed, run a job step under another account, typically a SQL Server account based on a Windows account: SQL Server Agent / Jobs / job / Steps / Edit / Advanced / Run as user:

- select the proxy

- type: SSIS package

- package source: File system
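The credential, proxy and job-step settings above can also be scripted. A minimal sketch, assuming SQL Server 2005 or later; every name in it (DOMAIN\PackageOwner, SsisCredential, SsisProxy, the job LoadDwh and the package path) is a hypothetical placeholder, and the /FILE command string follows dtexec syntax, so compare it with what SSMS generates for your version.

```sql
-- Step 1: credential that maps to a Windows account (placeholder values)
CREATE CREDENTIAL SsisCredential
    WITH IDENTITY = N'DOMAIN\PackageOwner', SECRET = N'<password>';
GO

USE msdb;
GO

-- Steps 2 and 2a: proxy that uses the credential
EXEC dbo.sp_add_proxy
    @proxy_name      = N'SsisProxy',
    @credential_name = N'SsisCredential',
    @enabled         = 1;

-- Step 2b: grant only the SSIS package execution subsystem (subsystem_id 11);
-- the GUI step above selects all subsystems instead
EXEC dbo.sp_grant_proxy_to_subsystem
    @proxy_name   = N'SsisProxy',
    @subsystem_id = 11;

-- Step 2c: msdb roles whose members may use the proxy
EXEC dbo.sp_grant_login_to_proxy @proxy_name = N'SsisProxy', @msdb_role = N'SQLAgentUserRole';
EXEC dbo.sp_grant_login_to_proxy @proxy_name = N'SsisProxy', @msdb_role = N'SQLAgentReaderRole';
EXEC dbo.sp_grant_login_to_proxy @proxy_name = N'SsisProxy', @msdb_role = N'SQLAgentOperatorRole';

-- Step 5: job step that runs a file system package under the proxy
-- (assumes a job named LoadDwh already exists)
EXEC dbo.sp_add_jobstep
    @job_name   = N'LoadDwh',
    @step_name  = N'Run package',
    @subsystem  = N'SSIS',
    @command    = N'/FILE "C:\Packages\LoadDwh.dtsx"',
    @proxy_name = N'SsisProxy';
```

The OS-level parts (service account, local groups, "Log on as a batch job") still have to be configured outside SQL Server.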

If the "A required privilege is not held by the client" error occurs (from the Microsoft website):

1. Set the SQL Server Agent service account in SQL Server Configuration Manager to the LocalSystem account.

2. Stop and then start the SQL Server Agent service.

3. Reset the SQL Server Agent service account in SQL Server Configuration Manager back to the original account.

4. Stop and then start the SQL Server Agent service.

From Marc at Stackoverflow:

So it boils down to really just two accounts:

one account is needed to run the SQL Server Agent Windows service - this is a Windows account on your machine / server which needs to have enough permissions to run the service, start and stop it - either use LocalSystem, Network Service, or whatever other Windows account you have to run services with

The other account would be the account to run your SQL Server Agent steps under - that's typically a SQL Server account (which could be based on a Windows account), and it needs enough privileges inside SQL Server to do its job, e.g. it needs access to the database objects and all. I would strive to have just one account for each app that runs the SQL Server jobs - makes life a whole lot easier!

From Influent at SQLTeam:

1. set ProtectionLevel to DontSaveSensitive in package properties in Visual Studio (using SSPI anyway so not sure it mattered)

2. created configuration file (had to alter path to config file in dtsx file since it's different on servers than in dev environment)

3. instead of importing the package into MSDB, set the job step Package Source to "File system" and pointed to dtsx file on local drive of server

4. set the Configurations tab of the job step to point to the dtsConfig file created in step 2

5. made sure the AD user kicking off the job was part of local server groups: Administrators, SQLServer2005DTSUser$SERVER, and SQLServer2005SQLAgent$SERVER (I eventually removed Administrator permissions because I only needed it to log to Windows Event Log)

6. made the associated SQL user to the user in step 5 part of the SQLAgentUserRole in the msdb database

7. made sure the local user assigned to the SQL Agent service was part of local server groups: Administrators, SQLServer2005DTSUser$SERVER, and SQLServer2005SQLAgent$SERVER

From xprog.blogspot.com:

1) Executed as user: DOMAIN\user. The process could not be created for step 1 of job 0xB013D0354C8CBD46B79E948740EF5441 (reason: 1314). The step failed.

The error 1314 is "A required privilege is not held by the client".

This message indicates that the SQL Server Service account doesn't have the required rights to switch the security context to the proxy account.

To fix it, verify that:

1. The proxy account has the "Act as part of the operating system" privilege.

2. The SQL Server account is in the group

SQLServer2005MSSQLUser$<ComputerName>$<InstanceName>

3. The proxy account is in the group

SQLServer2005DTSUser$<ComputerName>

The SQL Server Agent account must have the 'Adjust memory quotas for a process' privilege (called 'Increase quotas' before Windows Server 2003) on the SQL Server machine.

From FriggleFragle at MSDN:

an issue i found was that sql server agent and ssis had been changed to not use the local system account. this caused me to continually get the dreaded --> "A required privilege is not held by the client" error. i fought with it all afternoon and eventually updated the services to use the local system account and it worked. perhaps this will save some other as much time as i wasted.


Labels: OS, SQL, SSIS


Measuring disk I/O speed

Disk thruput tester is a handy tool for testing the sequential read and write performance of storage media, such as hard disks and SSDs. It supports configuring custom block sizes:

http://www.objectso.nl/

Using this tool, testing the read/write performance of blocks of 8 KB, 16 KB, 32 KB, etc. is a breeze. The size and location of the test file are also configurable.

Note: on the website the tool is called "disk throughput tester".


Labels: Database, Oracle, OS, SQL


Simple way to read Excel or Access data in SQL Server

-- list of available providers

exec master..xp_enum_oledb_providers

-- Three ways to read in Access data (file: c:\db1.mdb, table: TEST)

1a) Using OPENROWSET with an older MS Access version (*.mdb):

SELECT * FROM OPENROWSET('MSDASQL', 'Driver={Microsoft Access Driver (*.mdb)};Dbq=C:\db1.mdb;Uid=;pwd=;','SELECT * FROM TEST')

1b) For Access 2010 or later (*.accdb):

SELECT * FROM OPENROWSET('MSDASQL', 'DRIVER={Microsoft Access Driver (*.mdb, *.accdb)};Dbq=C:\db1.mdb;Uid=;pwd=;','SELECT * FROM MAANDEN')

2) Using opendatasource:

SELECT * FROM OPENDATASOURCE('Microsoft.Jet.OLEDB.4.0','Data Source=C:\db1.mdb;')...[TEST]

3) Using a linked server called TEST (Access 2010 or later):

EXEC sp_addlinkedserver

@server= N'TEST',

@srvproduct = N'MSDASQL',

@provider= N'MSDASQL',

@provstr= N'DRIVER={Microsoft Access Driver (*.mdb, *.accdb)};Dbq=C:\db1.mdb;Uid=;pwd=;'
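Once the linked server from step 3 exists, it can be queried with OPENQUERY, which passes the inner query through to the ODBC driver. A minimal sketch, assuming db1.mdb contains a table named TEST; depending on your security setup, a login mapping (sp_addlinkedsrvlogin) may also be needed.

```sql
-- Query the Access table through the linked server TEST created in step 3
SELECT * FROM OPENQUERY(TEST, 'SELECT * FROM TEST');

-- Remove the linked server (and its login mappings) when it is no longer needed
EXEC sp_dropserver @server = N'TEST', @droplogins = 'droplogins';
```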

-- Read in Excel (file: c:\employee.xls, worksheet: TEST)

SELECT * FROM OPENROWSET('MSDASQL', 'Driver={Microsoft Excel Driver (*.xls)};Dbq=C:\employee.xls;Uid=;pwd=;','SELECT * FROM [TEST$]')

-- Insert into text file

insert openrowset( 'Microsoft.ACE.OLEDB.12.0', 'Text;HDR=yes;Database=c:\tmp\', 'select * from test.csv') (testname, testaddress) select testname, testaddress from vw_test;


Note:

- MS Access (or at least its drivers) needs to be installed on the same server as SQL Server

- Make sure that ad hoc distributed queries are allowed:

exec sp_configure 'show advanced options', 1;

RECONFIGURE;

exec sp_configure 'Ad Hoc Distributed Queries', 1;

RECONFIGURE;
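To confirm that the settings took effect, the sys.configurations catalog view (SQL Server 2005 or later) can be queried. A small sketch; after the RECONFIGURE statements, value_in_use should be 1 for both options.

```sql
-- Configured value versus the value currently in use
SELECT name, value, value_in_use
FROM sys.configurations
WHERE name IN (N'show advanced options', N'Ad Hoc Distributed Queries');
```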

Possible error messages and solutions:

OLE DB provider "Microsoft.ACE.OLEDB.12.0" for linked server "(null)" returned message "Unspecified error"

Give read/write access to "everyone" on the server to the following folder:

C:\Users\<SQL Server Service Account>\AppData\Local\Temp

Make sure this is set on the server where the code is running, i.e. on the client PC where the code is executed within Management Studio.


Labels: SQL


Truncate staging tables

Before creating a backup of the database, you could clean out all staging tables to save some space in the backup and to reduce the time it takes to make the backup. You could use the following simple statement, which loops over all tables:

EXEC sp_MSforeachtable N'IF LEFT(''?'',11) = ''[dbo].[STG_'' BEGIN PRINT ''?'' TRUNCATE TABLE ? END'

The statement expects a strict naming convention: all staging table names should start with STG_, and this prefix should not be used for any other table type. Also make sure you do not have any "permanent" data in your staging environment.

Note:

The PRINT statement can be left out; it only helps you debug the statement. Also, TRUNCATE TABLE does not work on a table that is referenced by a foreign key.
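sp_MSforeachtable is an undocumented system procedure. A documented alternative is to loop over sys.tables yourself. The sketch below assumes SQL Server 2005 or later and the same STG_ convention in the dbo schema; unlike the one-liner, it skips tables referenced by a foreign key instead of failing on them.

```sql
DECLARE @name sysname, @sql nvarchar(max);

-- All dbo tables starting with STG_ that no foreign key refers to
DECLARE cStaging CURSOR LOCAL FAST_FORWARD FOR
    SELECT t.name
    FROM sys.tables AS t
    WHERE t.schema_id = SCHEMA_ID(N'dbo')
      AND t.name LIKE N'STG[_]%'            -- [_] matches a literal underscore
      AND NOT EXISTS (SELECT 1
                      FROM sys.foreign_keys AS fk
                      WHERE fk.referenced_object_id = t.object_id);

OPEN cStaging;
FETCH NEXT FROM cStaging INTO @name;

WHILE @@FETCH_STATUS = 0
BEGIN
    -- QUOTENAME protects against odd characters in table names
    SET @sql = N'TRUNCATE TABLE dbo.' + QUOTENAME(@name) + N';';
    PRINT @sql;                             -- optional, for debugging
    EXEC sys.sp_executesql @sql;
    FETCH NEXT FROM cStaging INTO @name;
END;

CLOSE cStaging;
DEALLOCATE cStaging;
```

Tables that are skipped because of a foreign key would have to be emptied with DELETE, or after dropping the constraint.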

Labels: Database, SQL


Return the size of pre-selected tables

A stored procedure I use a lot to get a resultset containing the size of a selection of tables in the database:

The table-name prefixes in the cursor's WHERE clause select the tables for which to return the size; replace them to match your own naming convention. After all records have been returned, the procedure adds a final total row to the set.

CREATE procedure sp_TableSize as

begin

SET ANSI_WARNINGS OFF

CREATE TABLE #temp

(

TABLENAME varchar(100),

ROWS int,

RESERVED varchar(25),

DATA varchar(25),

INDEX_SIZE varchar(25),

UNUSED varchar(25)

)

DECLARE @table nvarchar(100)

-- Select the tables for which to retrieve sizes

DECLARE cDWHTables cursor read_only for

SELECT name FROM sysobjects

WHERE xtype = 'U' -- user tables only

AND (LEFT(name, 4) in ('STG_','ODS_','DIM_','SYS_','FCT_','RPT_') OR

LEFT(name,3) = 'MD_')

OPEN cDWHTables

-- Store each record retrieved from sp_spaceused in temp table

FETCH NEXT FROM cDWHTables INTO @table

WHILE (@@fetch_status <> -1)

BEGIN

IF (@@fetch_status <> -2)

BEGIN

insert #temp exec sp_spaceused @table

END

FETCH NEXT FROM cDWHTables INTO @table

END

UPDATE #temp SET

reserved = left(reserved, len(reserved) - 3),

data = left(data, len(data) - 3),

index_size = left(index_size, len(index_size) - 3),

unused = left(unused, len(unused) - 3)

INSERT #temp

SELECT 'TOTAL' as tablename, sum(rows) as rows,

sum(convert(int,reserved)) as reserved,

sum(convert(int,data)) as data,

sum(convert(int,index_size)) as index_size,

sum(convert(int,unused)) as unused

FROM #temp

--Return the results as data set

SELECT

TABLENAME = tablename,

ROWS = convert(int,rows),

CONVERT(int,reserved) as RESERVED,

convert(int,data) as DATA,

convert(int,index_size) as INDEX_SIZE,

convert(int,unused) as UNUSED,

AVG_DATA = convert(numeric(18,2),case when rows>0 then convert(float, data) / convert(float, [rows]) else 0 end),

TOTAL = convert(int,data) + convert(int,index_size),

TOTAL_MB = (convert(int,data) + convert(int,index_size))/1024

FROM #temp

-- Clean up

DROP TABLE #temp

CLOSE cDWHTables

DEALLOCATE cDWHTables

end

Note:

The insert of the total row can alternatively be written using the COMPUTE clause on the main SELECT statement. Be aware that COMPUTE was removed in SQL Server 2012.
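On newer versions, roughly the same figures can be read from the sys.dm_db_partition_stats DMV, without a cursor or sp_spaceused. A sketch, assuming SQL Server 2008 or later (for GROUP BY ROLLUP, which produces the total row) and VIEW DATABASE STATE permission; page counts are multiplied by 8 because a page is 8 KB. It reports reserved and used space rather than splitting data and index size the way sp_spaceused does.

```sql
SELECT
    COALESCE(t.name, 'TOTAL')                                          AS TABLENAME,
    -- heap (0) or clustered index (1) rows only, so rows are not double-counted
    SUM(CASE WHEN ps.index_id IN (0, 1) THEN ps.row_count ELSE 0 END)  AS [ROWS],
    SUM(ps.reserved_page_count) * 8                                    AS RESERVED_KB,
    SUM(ps.used_page_count) * 8                                        AS USED_KB
FROM sys.dm_db_partition_stats AS ps
JOIN sys.tables AS t ON t.object_id = ps.object_id
WHERE LEFT(t.name, 4) IN ('STG_', 'ODS_', 'DIM_', 'SYS_', 'FCT_', 'RPT_')
   OR LEFT(t.name, 3) = 'MD_'
GROUP BY ROLLUP (t.name)
-- table rows first (largest first), the TOTAL row last
ORDER BY GROUPING(t.name), RESERVED_KB DESC;
```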

Labels: Database, SQL


Backup to network share

You can use the following T-SQL statement to back up a database to a network share in SQL Server 2000:


BACKUP DATABASE <database_name> TO DISK = '\\unc\folder\filename.bak'

WITH RETAINDAYS=0, INIT

This will create a full backup each time it is executed. The RETAINDAYS=0 option makes sure that the previous backup always gets overwritten. Not the safest thing, but it can be necessary if you are in a tight spot regarding disk space.

The backup file will then also appear in Enterprise Manager: select Tools, Backup Database and check the destination list box.

To check if the backup is complete and readable:

RESTORE VERIFYONLY FROM DISK = '\\unc\folder\filename.bak'

This gives you more control over your backup procedure than maintenance plans do, e.g. by putting these statements in an Execute SQL Task in a DTS package.

Alternatively, using Enterprise Manager, you can add a backup device via Management\Backup and have it point to \\unc\folder\filename.bak. Right-click it, select "Backup a database" and click "OK".

Note:

It is not recommended to back up directly to a network share, but sometimes there is no alternative.

Note 2:

Setting the RETAINDAYS property to e.g. 5 days means that a later BACKUP ... WITH INIT cannot overwrite the backup file during the next 5 days (unless the expiration check is disabled with the SKIP option). Beware of that!
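Because INIT with RETAINDAYS=0 always overwrites the single backup file, one possible variation is to put the date in the file name, so that each day gets its own file. A minimal sketch; MyDatabase and \\unc\folder are placeholders, old files must be cleaned up separately, and the SQL Server service account needs write access to the share.

```sql
DECLARE @file nvarchar(260);

-- Style 112 formats the date as yyyymmdd, e.g. MyDatabase_20060111.bak
SET @file = N'\\unc\folder\MyDatabase_' + CONVERT(nchar(8), GETDATE(), 112) + N'.bak';

-- INIT only overwrites a file with the same name, i.e. a second run on the same day
BACKUP DATABASE [MyDatabase] TO DISK = @file WITH INIT;

-- Check that the backup just written is complete and readable
RESTORE VERIFYONLY FROM DISK = @file;
```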

Labels: Database, SQL


Strange HAVING-clause behaviour

Say you want to return a resultset containing the last two days per week. First, construct the demo table:


CREATE TABLE TEST_TIME (WEEKCODE INT, ISODATE CHAR(8))

INSERT INTO TEST_TIME VALUES (1, '20060101')
INSERT INTO TEST_TIME VALUES (1, '20060102')
INSERT INTO TEST_TIME VALUES (1, '20060103')
INSERT INTO TEST_TIME VALUES (2, '20060108')
INSERT INTO TEST_TIME VALUES (2, '20060109')
INSERT INTO TEST_TIME VALUES (2, '20060110')
INSERT INTO TEST_TIME VALUES (2, '20060111')

The following SQL query will give the desired result set:

SELECT t.WEEKCODE, t.ISODATE FROM TEST_TIME t
WHERE t.ISODATE >=
(
  SELECT TOP 1 ISODATE FROM
  (
    SELECT TOP 2 ISODATE
    FROM TEST_TIME t2
    WHERE t.WEEKCODE = t2.WEEKCODE
    ORDER BY ISODATE DESC
  ) t1
  ORDER BY ISODATE ASC
)
ORDER BY t.WEEKCODE

It returns 4 records, as expected:

1 20060102
1 20060103
2 20060110
2 20060111

Just for fun, I tried the same thing using a GROUP BY and HAVING clause like this:

SELECT t.WEEKCODE, t.ISODATE FROM TEST_TIME t
GROUP BY t.WEEKCODE, t.ISODATE
HAVING t.ISODATE >=
(
  SELECT TOP 1 ISODATE FROM
  (
    SELECT TOP 2 ISODATE
    FROM TEST_TIME t2
    WHERE t.WEEKCODE = t2.WEEKCODE
    ORDER BY ISODATE DESC
  ) t1
  ORDER BY ISODATE ASC
)
ORDER BY t.WEEKCODE

It is far less efficient, but one would expect it to return the same result set. It does not! In fact, it returns 28 records:

1 20060102
1 20060102
1 20060102
1 20060102
1 20060102
1 20060102
1 20060102
1 20060103
1 20060103
1 20060103
1 20060103
1 20060103
1 20060103
1 20060103
2 20060110
2 20060110
2 20060110
2 20060110
2 20060110
2 20060110
2 20060110
2 20060111
2 20060111
2 20060111
2 20060111
2 20060111
2 20060111
2 20060111

That is even more than there are in the table! This is very strange behaviour. Stripping the HAVING clause returns 7 records, as expected. But adding it results in a Cartesian product of the 7 source records and the 4 top-2 records (2 for week 1 and 2 for week 2), resulting in 7 x 4 = 28 records.

From BOL:

"The HAVING clause is then applied to the rows in the result set that are produced by grouping. Only the groups that meet the HAVING conditions appear in the query output. You can apply a HAVING clause only to columns that also appear in the GROUP BY clause or in an aggregate function."

So, first the grouping, THEN an extra filtering on that set. How can filtering 7 records result in 28 records? Furthermore, the 28 records are not distinct, although distinct records are exactly what you would expect from a GROUP BY clause.

The conclusion is that, with a correlated subquery in the HAVING clause, the HAVING clause is evaluated before the GROUP BY clause.
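For the original goal, the last two days per week, SQL Server 2005 and later offer a window-function alternative that needs neither the correlated TOP subquery nor a HAVING clause. A sketch against the TEST_TIME demo table; on the demo data it should return the same four rows as the WHERE query. One difference: ROW_NUMBER keeps exactly two rows per week, whereas the >= comparison can return more than two rows when dates repeat within a week.

```sql
SELECT x.WEEKCODE, x.ISODATE
FROM
(
    -- Number the days within each week, newest first
    SELECT WEEKCODE, ISODATE,
           ROW_NUMBER() OVER (PARTITION BY WEEKCODE ORDER BY ISODATE DESC) AS RN
    FROM TEST_TIME
) AS x
WHERE x.RN <= 2          -- keep the two most recent days per week
ORDER BY x.WEEKCODE, x.ISODATE;
```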

Labels: SQL

